#include <REMORA_PhysBCFunct.H>

Public Member Functions

| REMORAPhysBCFunct (const int lev, const amrex::Geometry &geom, const amrex::Vector< amrex::BCRec > &domain_bcs_type, const amrex::Gpu::DeviceVector< amrex::BCRec > &domain_bcs_type_d, amrex::Array< amrex::Array< amrex::Real, AMREX_SPACEDIM *2 >, AMREX_SPACEDIM+NCONS+8 > bc_extdir_vals) | |

| Constructor for physical boundary condition functor. | |

| ~REMORAPhysBCFunct () | |

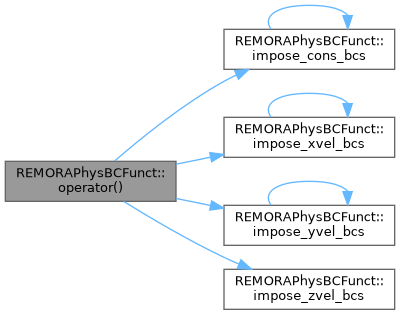

| void | operator() (amrex::MultiFab &mf, const amrex::MultiFab &mask, int icomp, int ncomp, amrex::IntVect const &nghost, amrex::Real time, int bccomp, int n_not_fill=0, const amrex::MultiFab &mf_calc=amrex::MultiFab(), const amrex::MultiFab &mf_msku=amrex::MultiFab(), const amrex::MultiFab &mf_mskv=amrex::MultiFab()) |

| apply boundary condition to mf | |

| void | impose_xvel_bcs (const amrex::Array4< amrex::Real > &dest_arr, const amrex::Box &bx, const amrex::Box &domain, const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > dxInv, const amrex::Array4< const amrex::Real > &msku, const amrex::Array4< const amrex::Real > &calc_arr, amrex::Real time, int bccomp) |

| apply x-velocity type boundary conditions | |

| void | impose_yvel_bcs (const amrex::Array4< amrex::Real > &dest_arr, const amrex::Box &bx, const amrex::Box &domain, const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > dxInv, const amrex::Array4< const amrex::Real > &mskv, const amrex::Array4< const amrex::Real > &calc_arr, amrex::Real time, int bccomp) |

| apply y-velocity type boundary conditions | |

| void | impose_zvel_bcs (const amrex::Array4< amrex::Real > &dest_arr, const amrex::Box &bx, const amrex::Box &domain, const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > dxInv, const amrex::Array4< const amrex::Real > &mskr, amrex::Real time, int bccomp) |

| apply z-velocity type boundary conditions | |

| void | impose_cons_bcs (const amrex::Array4< amrex::Real > &mf, const amrex::Box &bx, const amrex::Box &valid_bx, const amrex::Box &domain, const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > dxInv, const amrex::Array4< const amrex::Real > &mskr, const amrex::Array4< const amrex::Real > &msku, const amrex::Array4< const amrex::Real > &mskv, const amrex::Array4< const amrex::Real > &calc_arr, int icomp, int ncomp, amrex::Real time, int bccomp, int n_not_fill) |

| apply scalar type boundary conditions | |

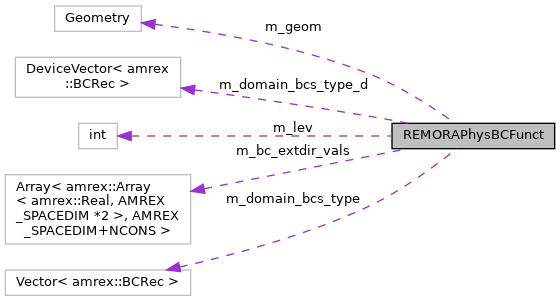

Private Attributes

| int | m_lev |

| amrex::Geometry | m_geom |

| amrex::Vector< amrex::BCRec > | m_domain_bcs_type |

| amrex::Gpu::DeviceVector< amrex::BCRec > | m_domain_bcs_type_d |

| amrex::Array< amrex::Array< amrex::Real, AMREX_SPACEDIM *2 >, AMREX_SPACEDIM+NCONS+8 > | m_bc_extdir_vals |

Detailed Description

Definition at line 21 of file REMORA_PhysBCFunct.H.

Constructor & Destructor Documentation

◆ REMORAPhysBCFunct()

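| REMORAPhysBCFunct::REMORAPhysBCFunct | ( | const int | lev, |

| const amrex::Geometry & | geom, | ||

| const amrex::Vector< amrex::BCRec > & | domain_bcs_type, | ||

| const amrex::Gpu::DeviceVector< amrex::BCRec > & | domain_bcs_type_d, | ||

| amrex::Array< amrex::Array< amrex::Real, AMREX_SPACEDIM *2 >, AMREX_SPACEDIM+NCONS+8 > | bc_extdir_vals | ||

| ) | inline |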

Constructor for physical boundary condition functor.

Definition at line 25 of file REMORA_PhysBCFunct.H.

◆ ~REMORAPhysBCFunct()

| REMORAPhysBCFunct::~REMORAPhysBCFunct | ( | ) | inline |

Definition at line 35 of file REMORA_PhysBCFunct.H.

Member Function Documentation

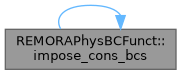

◆ impose_cons_bcs()

| void REMORAPhysBCFunct::impose_cons_bcs | ( | const amrex::Array4< amrex::Real > & | mf, |

| const amrex::Box & | bx, | ||

| const amrex::Box & | valid_bx, | ||

| const amrex::Box & | domain, | ||

| const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > | dxInv, | ||

| const amrex::Array4< const amrex::Real > & | mskr, | ||

| const amrex::Array4< const amrex::Real > & | msku, | ||

| const amrex::Array4< const amrex::Real > & | mskv, | ||

| const amrex::Array4< const amrex::Real > & | calc_arr, | ||

| int | icomp, | ||

| int | ncomp, | ||

| amrex::Real | time, | ||

| int | bccomp, | ||

| int | n_not_fill | ||

| ) |

apply scalar type boundary conditions

- Parameters

| [in,out] | mf | data on which to apply BCs |
| [in] | bx | box to update on |
| [in] | valid_bx | valid box |
| [in] | domain | domain box |
| [in] | dxInv | pm or pn (inverse grid spacing) |
| [in] | mskr | land-sea mask on rho-points |
| [in] | msku | land-sea mask on u-points |
| [in] | mskv | land-sea mask on v-points |
| [in] | calc_arr | data to use in the RHS of calculations |
| [in] | icomp | component to update |
| [in] | ncomp | number of components to update, starting from icomp |
| [in] | time | current time |
| [in] | bccomp | index into both domain_bcs_type_bcr and bc_extdir_vals for icomp=0 |
| [in] | n_not_fill | perimeter of cells in x and y where BCs are not applied for non-ext_dir conditions |

Definition at line 22 of file REMORA_BoundaryConditions_cons.cpp.

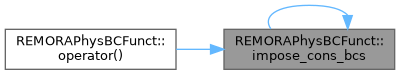

Referenced by impose_cons_bcs() and operator()().

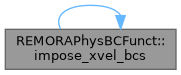

◆ impose_xvel_bcs()

| void REMORAPhysBCFunct::impose_xvel_bcs | ( | const amrex::Array4< amrex::Real > & | dest_arr, |

| const amrex::Box & | bx, | ||

| const amrex::Box & | domain, | ||

| const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > | dxInv, | ||

| const amrex::Array4< const amrex::Real > & | msku, | ||

| const amrex::Array4< const amrex::Real > & | calc_arr, | ||

| amrex::Real | time, | ||

| int | bccomp | ||

| ) |

apply x-velocity type boundary conditions

- Parameters

| [in,out] | dest_arr | data on which to apply BCs |
| [in] | bx | box to update on |
| [in] | domain | domain box |
| [in] | dxInv | pm or pn (inverse grid spacing) |
| [in] | msku | land-sea mask on u-points |
| [in] | calc_arr | data to use in the RHS of calculations |
| [in] | time | current time |
| [in] | bccomp | index into both domain_bcs_type_bcr and bc_extdir_vals for icomp=0 |

Definition at line 16 of file REMORA_BoundaryConditions_xvel.cpp.

Referenced by impose_xvel_bcs() and operator()().

◆ impose_yvel_bcs()

| void REMORAPhysBCFunct::impose_yvel_bcs | ( | const amrex::Array4< amrex::Real > & | dest_arr, |

| const amrex::Box & | bx, | ||

| const amrex::Box & | domain, | ||

| const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > | dxInv, | ||

| const amrex::Array4< const amrex::Real > & | mskv, | ||

| const amrex::Array4< const amrex::Real > & | calc_arr, | ||

| amrex::Real | time, | ||

| int | bccomp | ||

| ) |

apply y-velocity type boundary conditions

- Parameters

| [in,out] | dest_arr | data on which to apply BCs |
| [in] | bx | box to update on |
| [in] | domain | domain box |
| [in] | dxInv | pm or pn (inverse grid spacing) |
| [in] | mskv | land-sea mask on v-points |
| [in] | calc_arr | data to use in the RHS of calculations |
| [in] | time | current time |
| [in] | bccomp | index into both domain_bcs_type_bcr and bc_extdir_vals for icomp=0 |

Definition at line 16 of file REMORA_BoundaryConditions_yvel.cpp.

Referenced by impose_yvel_bcs() and operator()().

◆ impose_zvel_bcs()

| void REMORAPhysBCFunct::impose_zvel_bcs | ( | const amrex::Array4< amrex::Real > & | dest_arr, |

| const amrex::Box & | bx, | ||

| const amrex::Box & | domain, | ||

| const amrex::GpuArray< amrex::Real, AMREX_SPACEDIM > | dxInv, | ||

| const amrex::Array4< const amrex::Real > & | mskr, | ||

| amrex::Real | time, | ||

| int | bccomp | ||

| ) |

apply z-velocity type boundary conditions

- Parameters

| [in,out] | dest_arr | data on which to apply BCs |
| [in] | bx | box to update on |
| [in] | domain | domain box |
| [in] | dxInv | pm or pn (inverse grid spacing) |
| [in] | mskr | land-sea mask on rho-points |
| [in] | time | current time |
| [in] | bccomp | index into both domain_bcs_type_bcr and bc_extdir_vals for icomp=0 |

Based on BCRec for the domain, we need to make BCRec for this Box
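The remark above describes a per-direction rule: a box face inherits the domain's boundary type only where the box reaches the domain boundary, and is otherwise treated as interior. The following is a minimal sketch of that rule using small stand-in structs (`SimpleBox`, `SimpleBCRec`, and `BCKind` are hypothetical names, not AMReX or REMORA types). AMReX provides `amrex::setBC` for this kind of operation; whether this function calls it is not stated on this page.

```cpp
#include <array>
#include <cassert>

constexpr int kSpaceDim = 3;  // stands in for AMREX_SPACEDIM (assumed 3 here)

// Placeholder boundary kinds; the real code uses AMReX/REMORA BC type enums.
enum class BCKind { interior, ext_dir, foextrap };

// Stand-in for amrex::Box: inclusive low and high cell indices per direction.
struct SimpleBox {
    std::array<int, kSpaceDim> lo;
    std::array<int, kSpaceDim> hi;
};

// Stand-in for amrex::BCRec: one boundary kind per low and high face.
struct SimpleBCRec {
    std::array<BCKind, kSpaceDim> lo;
    std::array<BCKind, kSpaceDim> hi;
};

// Derive the boundary record for one box from the domain-wide record.
SimpleBCRec bcrec_for_box(const SimpleBox& bx, const SimpleBox& domain,
                          const SimpleBCRec& domain_bc) {
    SimpleBCRec out{};  // value-initialized: every face starts as interior
    for (int d = 0; d < kSpaceDim; ++d) {
        assert(bx.lo[d] <= bx.hi[d]);
        // A face is physical only if the box touches or extends past the domain edge.
        out.lo[d] = (bx.lo[d] <= domain.lo[d]) ? domain_bc.lo[d] : BCKind::interior;
        out.hi[d] = (bx.hi[d] >= domain.hi[d]) ? domain_bc.hi[d] : BCKind::interior;
    }
    return out;
}
```

A box whose ghost region extends past the low-x edge of the domain would pick up the domain's low-x condition, while its faces that lie inside the domain stay interior.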

Definition at line 17 of file REMORA_BoundaryConditions_zvel.cpp.

Referenced by operator()().

◆ operator()()

| void REMORAPhysBCFunct::operator() | ( | amrex::MultiFab & | mf, |

| const amrex::MultiFab & | mask, | ||

| int | icomp, | ||

| int | ncomp, | ||

| amrex::IntVect const & | nghost, | ||

| amrex::Real | time, | ||

| int | bccomp, | ||

| int | n_not_fill = 0, |

||

| const amrex::MultiFab & | mf_calc = amrex::MultiFab(), |

||

| const amrex::MultiFab & | mf_msku = amrex::MultiFab(), |

||

| const amrex::MultiFab & | mf_mskv = amrex::MultiFab() |

||

| ) |

apply boundary condition to mf

- Parameters

| [in,out] | mf | multifab to be filled |
| [in] | mask | land/sea mask for the variable |
| [in] | icomp | index into the MultiFab; if cell-centered this can be any value from 0 to NCONS-1, if face-centered any value from 0 to 2 (inclusive) |
| [in] | ncomp | number of components; if cell-centered this can be any value from 1 to NCONS as long as icomp+ncomp <= NCONS-1, if face-centered this must be 1 |
| [in] | nghost | how many ghost cells to be filled |
| [in] | time | time at which the data should be filled |
| [in] | bccomp | index into both domain_bcs_type_bcr and bc_extdir_vals for icomp = 0, so this follows the BCVars enum |
| [in] | n_not_fill | halo size to not fill |
| [in] | mf_calc | data to use in calculation of RHS |
| [in] | mf_msku | land-sea mask at u-points |
| [in] | mf_mskv | land-sea mask at v-points |
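Because the last four arguments have defaults, a caller can stop after `bccomp` or supply only as many trailing arguments as it needs. The sketch below mirrors the parameter order with stand-in types to show that calling pattern. `FieldStub`, `CallSummary`, and `BCFunctorStub` are hypothetical names, `nghost` is reduced to a plain `int`, and the body only records which optional inputs were passed; it does not reproduce REMORA's filling logic.

```cpp
#include <cassert>

// Stand-in for amrex::MultiFab: only tracks whether data was attached.
struct FieldStub {
    bool defined = false;
    FieldStub() = default;
    explicit FieldStub(bool d) : defined(d) {}
};

// Records what a call supplied, so the effect of the defaults is visible.
struct CallSummary {
    int icomp;
    int ncomp;
    int bccomp;
    int n_not_fill;
    bool has_calc;
    bool has_msku;
    bool has_mskv;
};

// Hypothetical functor with the same argument order as operator() above.
struct BCFunctorStub {
    CallSummary operator()(FieldStub& mf, const FieldStub& mask,
                           int icomp, int ncomp, int nghost, double time,
                           int bccomp, int n_not_fill = 0,
                           const FieldStub& mf_calc = FieldStub(),
                           const FieldStub& mf_msku = FieldStub(),
                           const FieldStub& mf_mskv = FieldStub()) const {
        // Unused in this sketch; a real implementation would fill ghost cells of mf.
        (void)mf; (void)mask; (void)nghost; (void)time;
        return {icomp, ncomp, bccomp, n_not_fill,
                mf_calc.defined, mf_msku.defined, mf_mskv.defined};
    }
};
```

Default arguments are positional, so passing `mf_calc` requires passing `n_not_fill` explicitly as well, even when the intended value is 0.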

Definition at line 20 of file REMORA_PhysBCFunct.cpp.

Member Data Documentation

◆ m_bc_extdir_vals

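| amrex::Array< amrex::Array< amrex::Real, AMREX_SPACEDIM *2 >, AMREX_SPACEDIM+NCONS+8 > REMORAPhysBCFunct::m_bc_extdir_vals | private |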

Definition at line 74 of file REMORA_PhysBCFunct.H.

Referenced by impose_cons_bcs(), impose_xvel_bcs(), impose_yvel_bcs(), and impose_zvel_bcs().
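The nested array type can be read as a table with one row per boundary-condition variable (`AMREX_SPACEDIM+NCONS+8` rows) and one column per domain face (`AMREX_SPACEDIM*2` columns). The sketch below uses `std::array` directly, which `amrex::Array` aliases. The value chosen for `kNCons` is a placeholder, and the face ordering (all low faces first, then all high faces) is an assumption based on the usual AMReX orientation convention, not something this page confirms.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Stand-ins for build-time constants; these values are assumptions for illustration.
constexpr int kSpaceDim  = 3;                      // plays the role of AMREX_SPACEDIM
constexpr int kNCons     = 4;                      // hypothetical; REMORA defines the real NCONS
constexpr int kNumBCVars = kSpaceDim + kNCons + 8; // rows: one per BC variable
constexpr int kNumFaces  = kSpaceDim * 2;          // columns: one per domain face

using Real = double;
using ExtDirTable = std::array<std::array<Real, kNumFaces>, kNumBCVars>;

// Assumed column ordering: x-lo, y-lo, z-lo, x-hi, y-hi, z-hi.
constexpr int face_index(int dir, bool high_side) {
    return dir + (high_side ? kSpaceDim : 0);
}

// Look up the external Dirichlet value for one variable on one face.
Real extdir_value(const ExtDirTable& table, int bccomp, int dir, bool high_side) {
    assert(bccomp >= 0 && bccomp < kNumBCVars);
    assert(dir >= 0 && dir < kSpaceDim);
    return table[static_cast<std::size_t>(bccomp)]
                [static_cast<std::size_t>(face_index(dir, high_side))];
}

// Build a table that is zero everywhere except one (variable, face) entry.
ExtDirTable make_table_with_one_value(int bccomp, int dir, bool high_side, Real value) {
    assert(bccomp >= 0 && bccomp < kNumBCVars);
    assert(dir >= 0 && dir < kSpaceDim);
    ExtDirTable table{};  // value-initialized: every entry is 0.0
    table[static_cast<std::size_t>(bccomp)]
         [static_cast<std::size_t>(face_index(dir, high_side))] = value;
    return table;
}
```

Under these assumptions, `bccomp` selects the row and the face selects the column, which matches how the parameter descriptions above describe `bccomp` as an index into `bc_extdir_vals`.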

◆ m_domain_bcs_type

| amrex::Vector< amrex::BCRec > REMORAPhysBCFunct::m_domain_bcs_type | private |

Definition at line 72 of file REMORA_PhysBCFunct.H.

Referenced by impose_cons_bcs(), impose_xvel_bcs(), impose_yvel_bcs(), and impose_zvel_bcs().

◆ m_domain_bcs_type_d

| amrex::Gpu::DeviceVector< amrex::BCRec > REMORAPhysBCFunct::m_domain_bcs_type_d | private |

Definition at line 73 of file REMORA_PhysBCFunct.H.

◆ m_geom

| amrex::Geometry REMORAPhysBCFunct::m_geom | private |

Definition at line 71 of file REMORA_PhysBCFunct.H.

Referenced by impose_cons_bcs(), impose_xvel_bcs(), impose_yvel_bcs(), impose_zvel_bcs(), and operator()().

◆ m_lev

| int REMORAPhysBCFunct::m_lev | private |

Definition at line 70 of file REMORA_PhysBCFunct.H.

The documentation for this class was generated from the following files:

- /home/runner/work/REMORA/REMORA/Source/BoundaryConditions/REMORA_PhysBCFunct.H

- /home/runner/work/REMORA/REMORA/Source/BoundaryConditions/REMORA_BoundaryConditions_cons.cpp

- /home/runner/work/REMORA/REMORA/Source/BoundaryConditions/REMORA_BoundaryConditions_xvel.cpp

- /home/runner/work/REMORA/REMORA/Source/BoundaryConditions/REMORA_BoundaryConditions_yvel.cpp

- /home/runner/work/REMORA/REMORA/Source/BoundaryConditions/REMORA_BoundaryConditions_zvel.cpp

- /home/runner/work/REMORA/REMORA/Source/BoundaryConditions/REMORA_PhysBCFunct.cpp